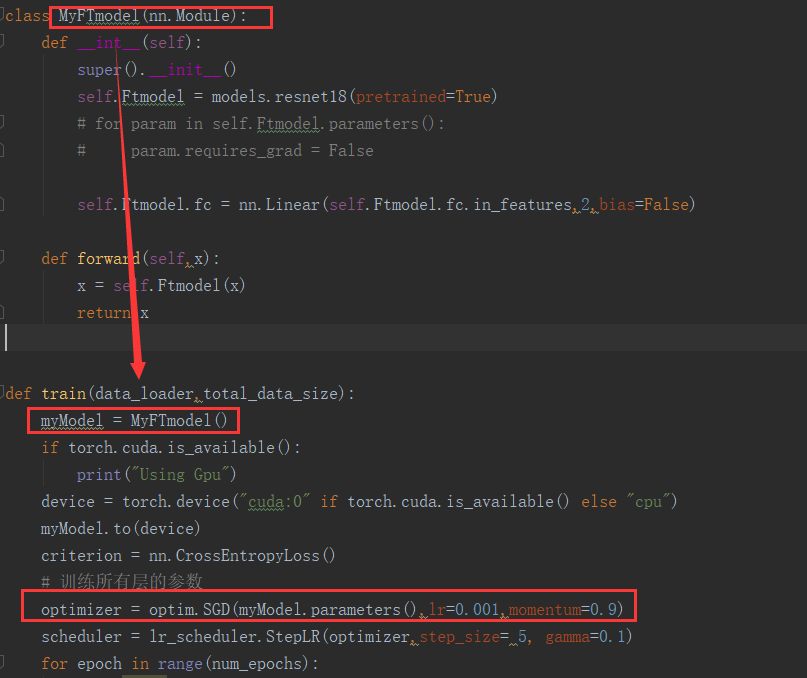

machine learning - PyTorch optimizer not reading parameters from my Model class dict - Stack Overflow

`torch.optim.lr_scheduler.SequentialLR` doesn't have an `optimizer` attribute · Issue #67318 · pytorch/pytorch · GitHub

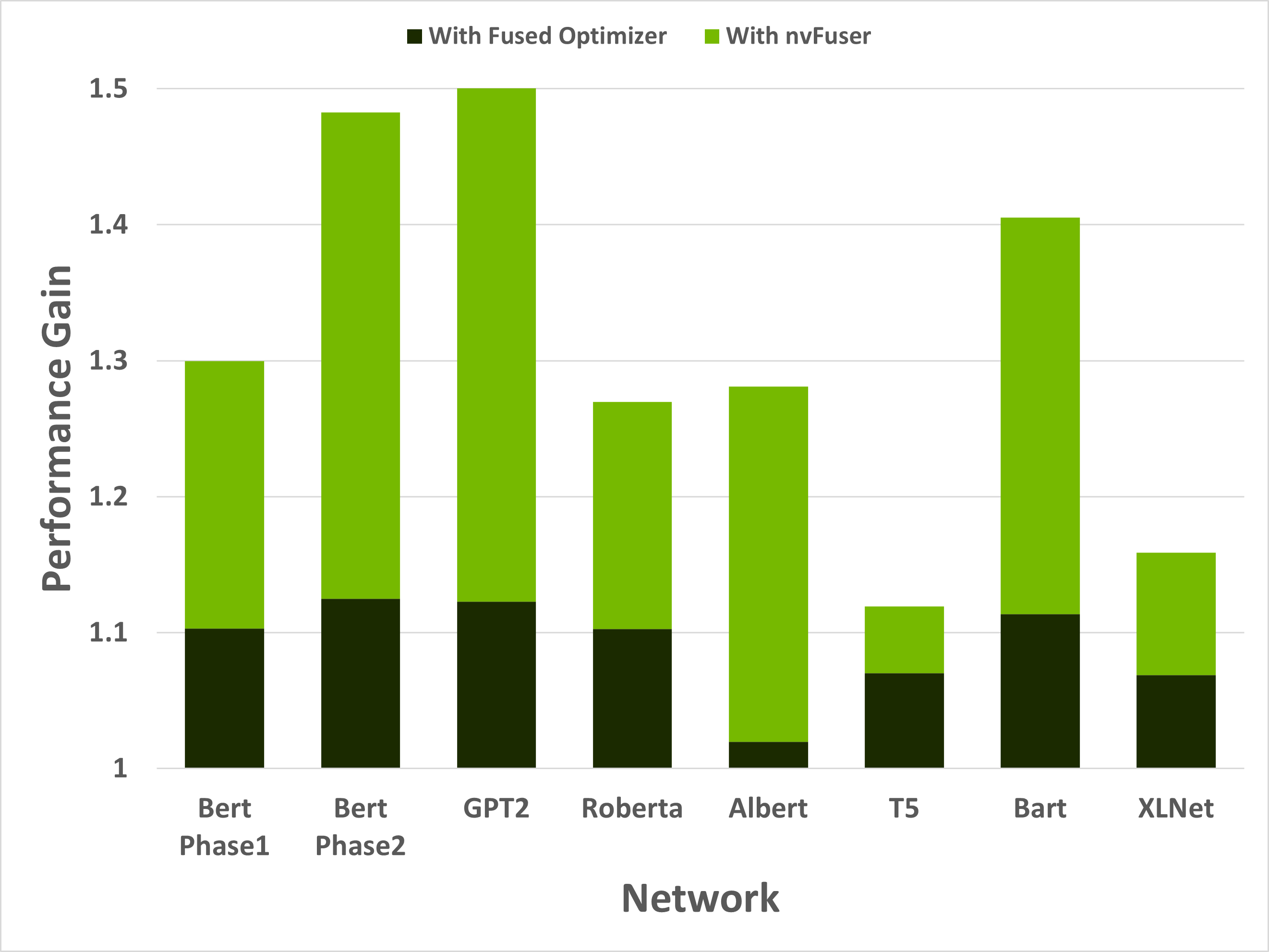

AssemblyAI on X: "PyTorch 2.0 was announced! Main new feature: `torch.compile`. A compiled mode that accelerates your model without needing to change your model code. It can speed up training by 38-76%, …"

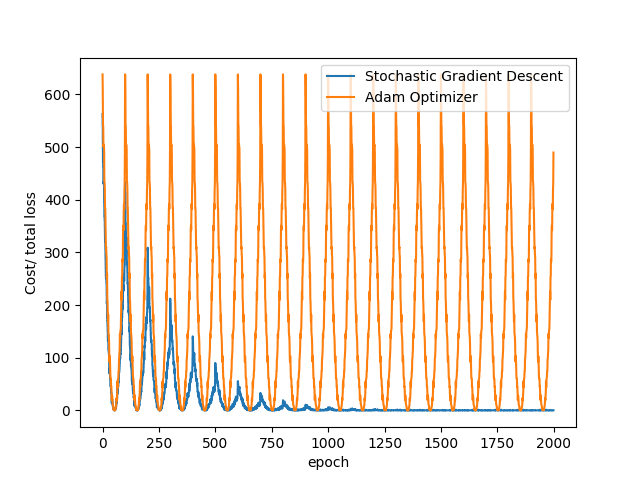

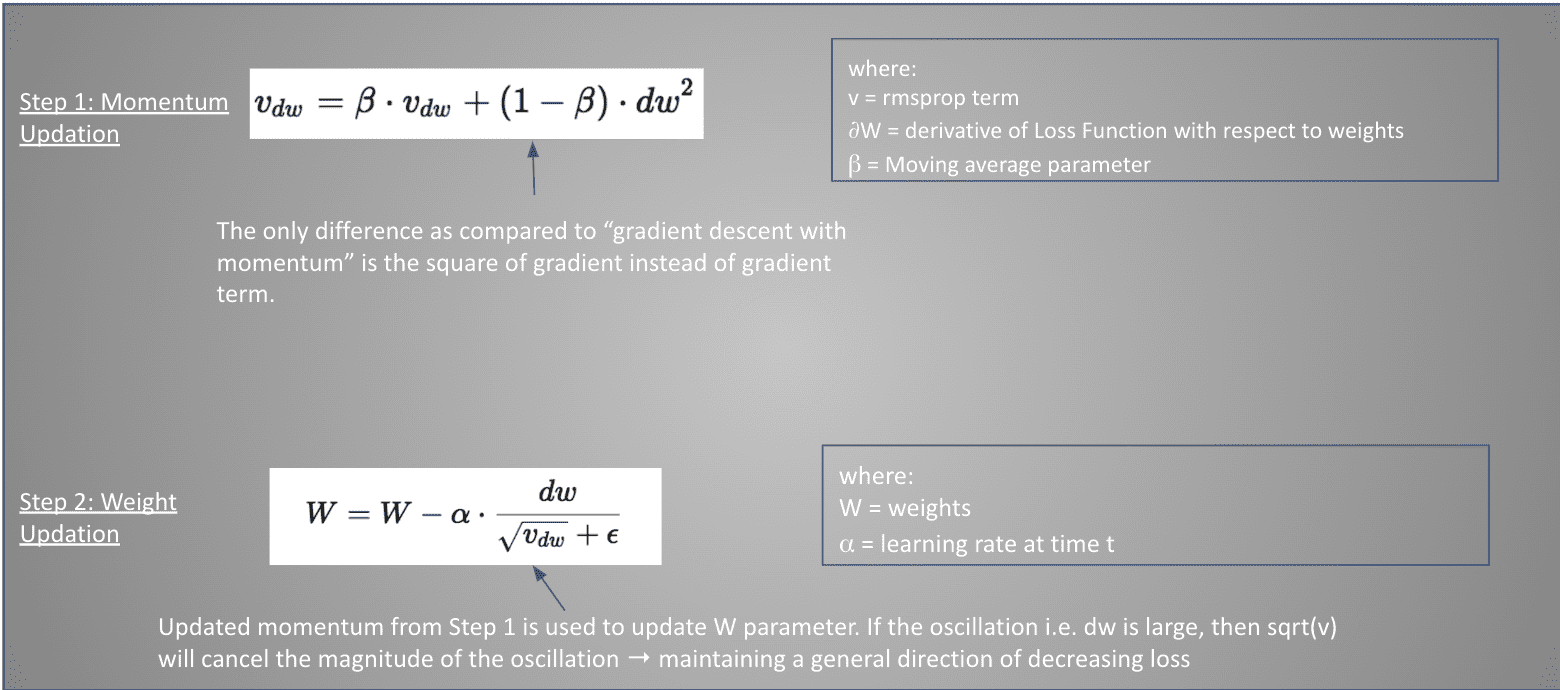

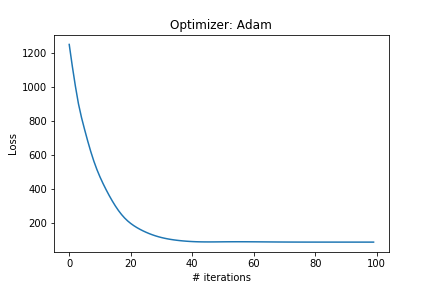

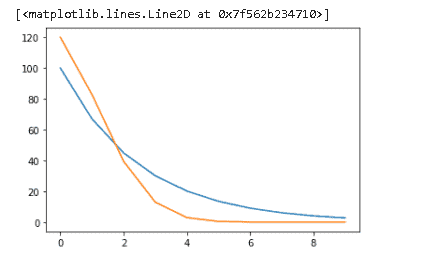

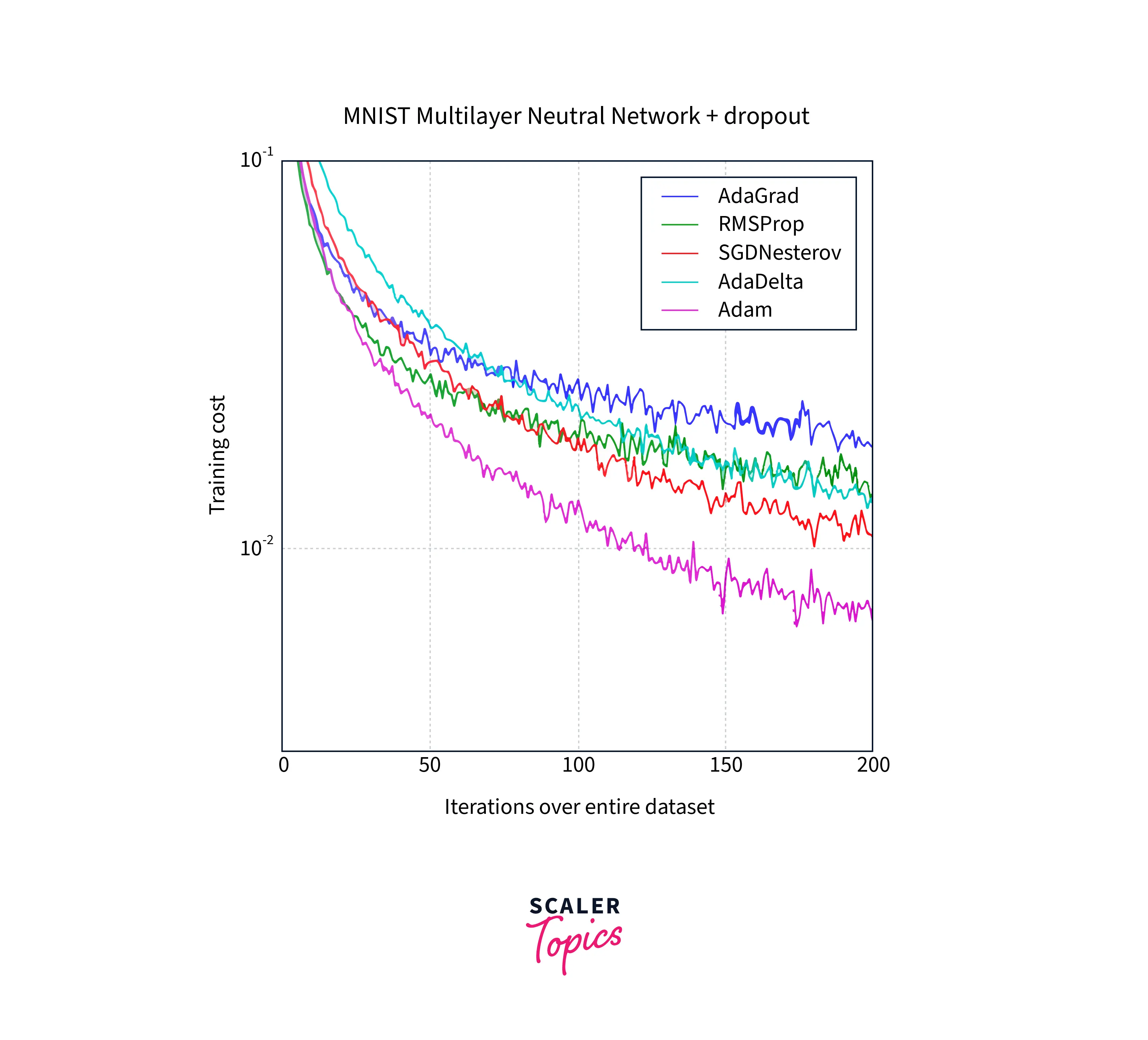

Loss jumps abruptly when I decay the learning rate with Adam optimizer in PyTorch - Artificial Intelligence Stack Exchange