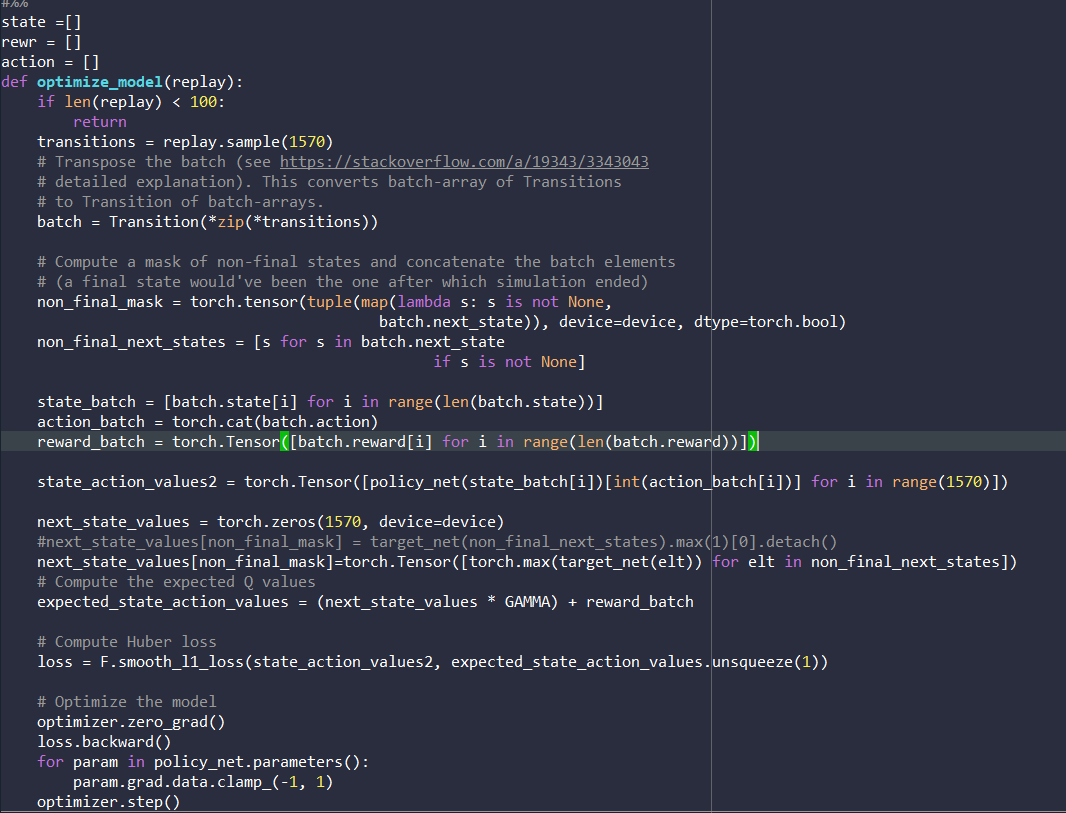

PyTorch 1.12.1 RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation - autograd - PyTorch Forums

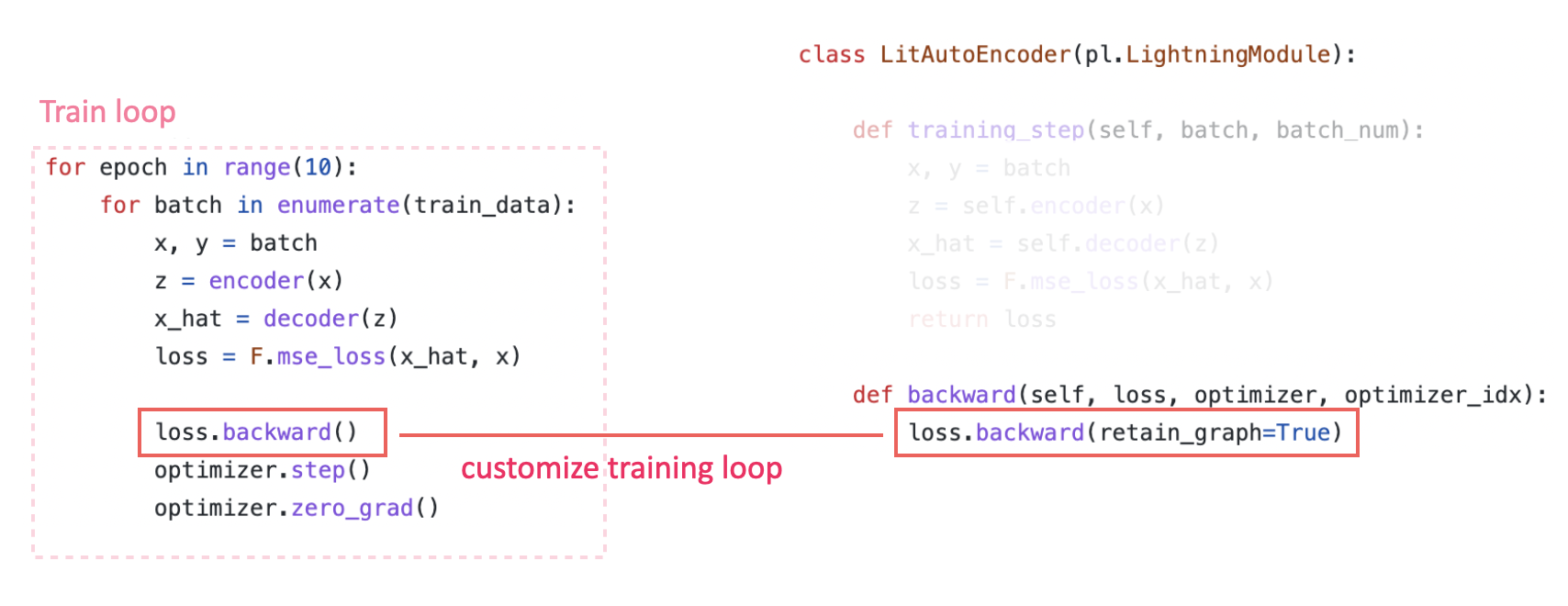

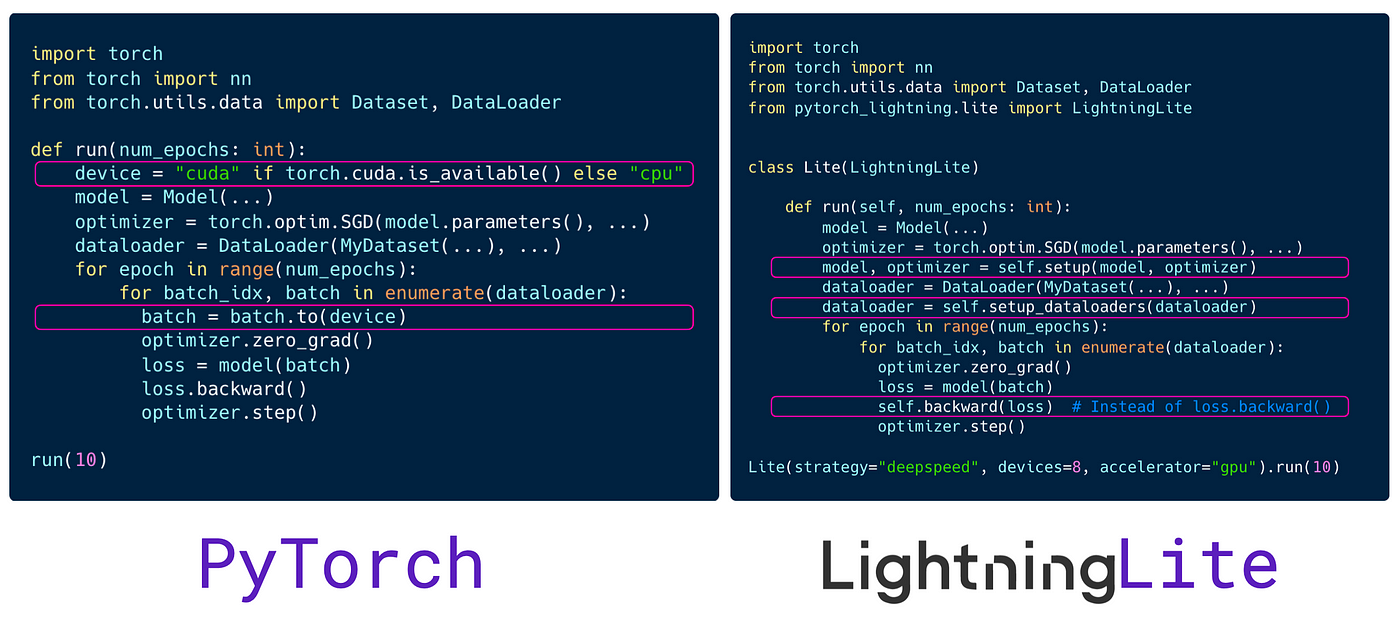

Scale your PyTorch code with LightningLite | by PyTorch Lightning team | PyTorch Lightning Developer Blog

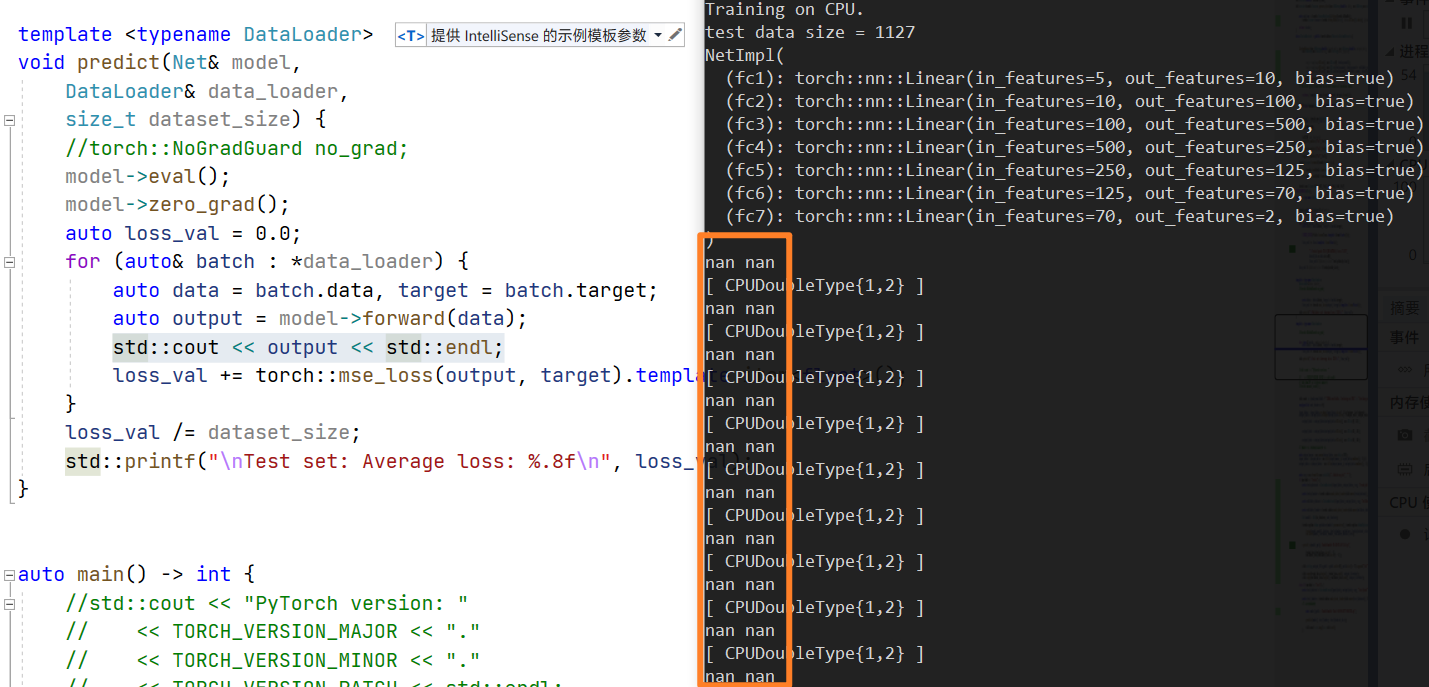

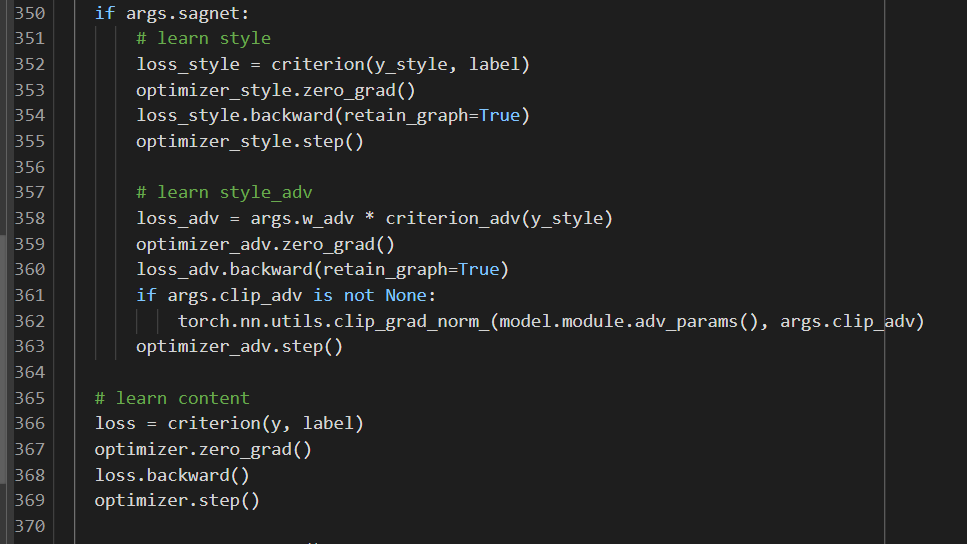

Trying to backward through the graph a second time, but the saved intermediate results have already been freed. Specify retain_graph=True when calling backward the first time - torch.package / torch::deploy - PyTorch

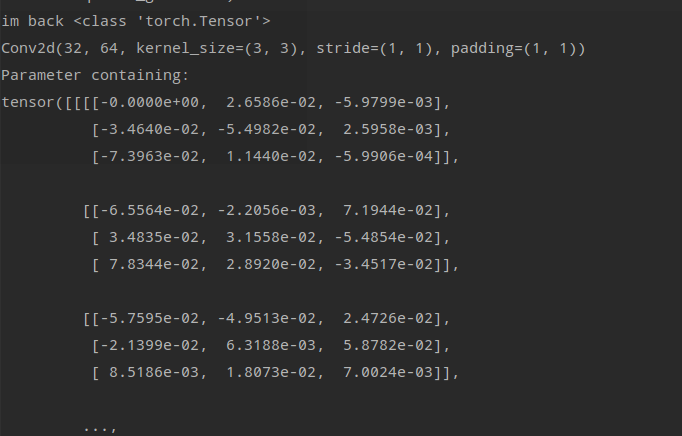

How to freeze or fix the specific (subset, partial) weight in convolution filter - autograd - PyTorch Forums

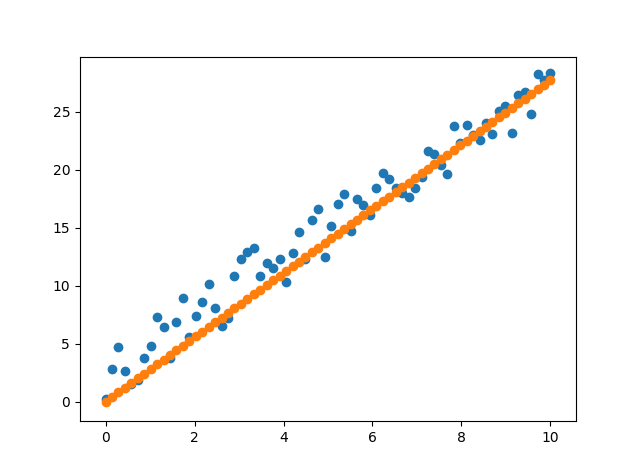

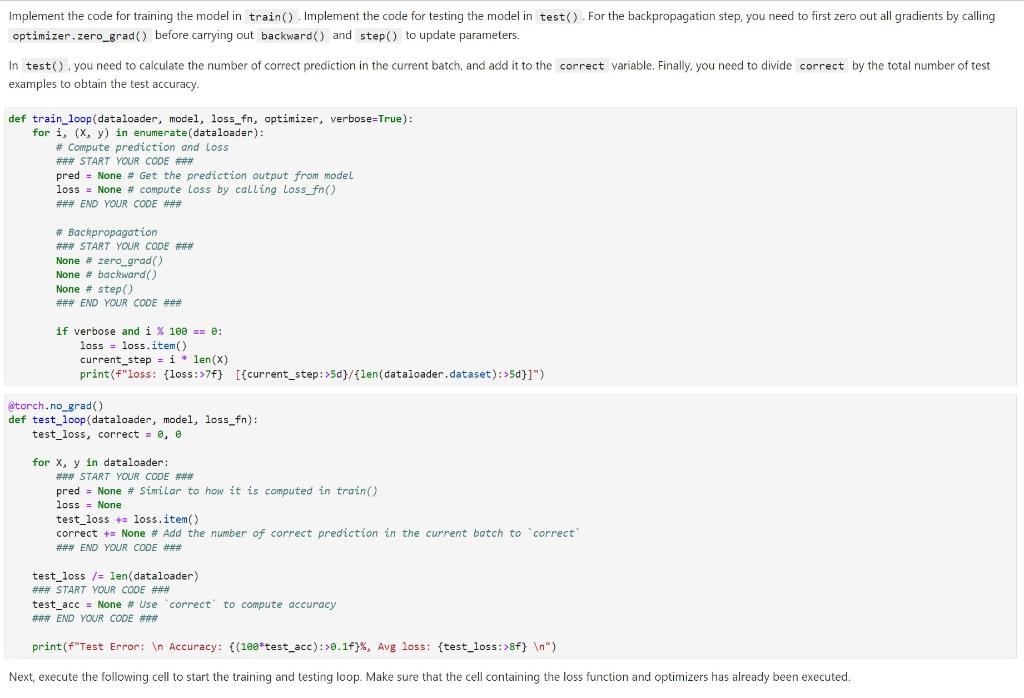

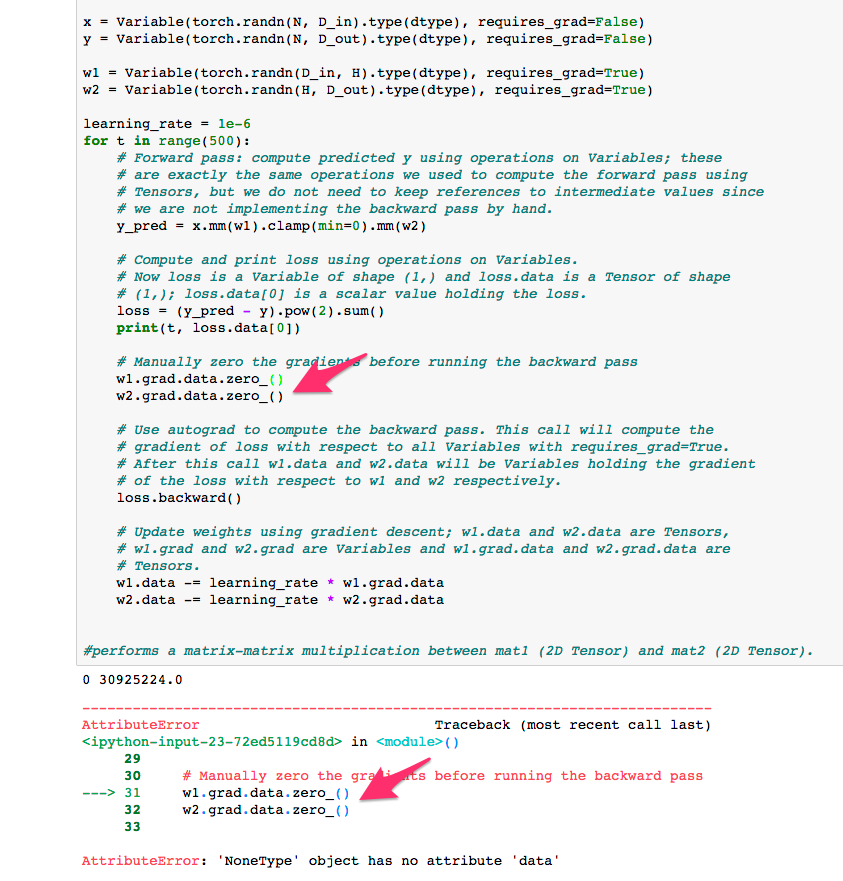

![[PyTorch] 3. Tensor vs Variable, zero_grad(), Retrieving value from Tensor | by jun94 | jun-devpBlog | Medium](https://miro.medium.com/v2/resize:fit:908/1*q_GojplgLYhqIqKcntvVRw.png)