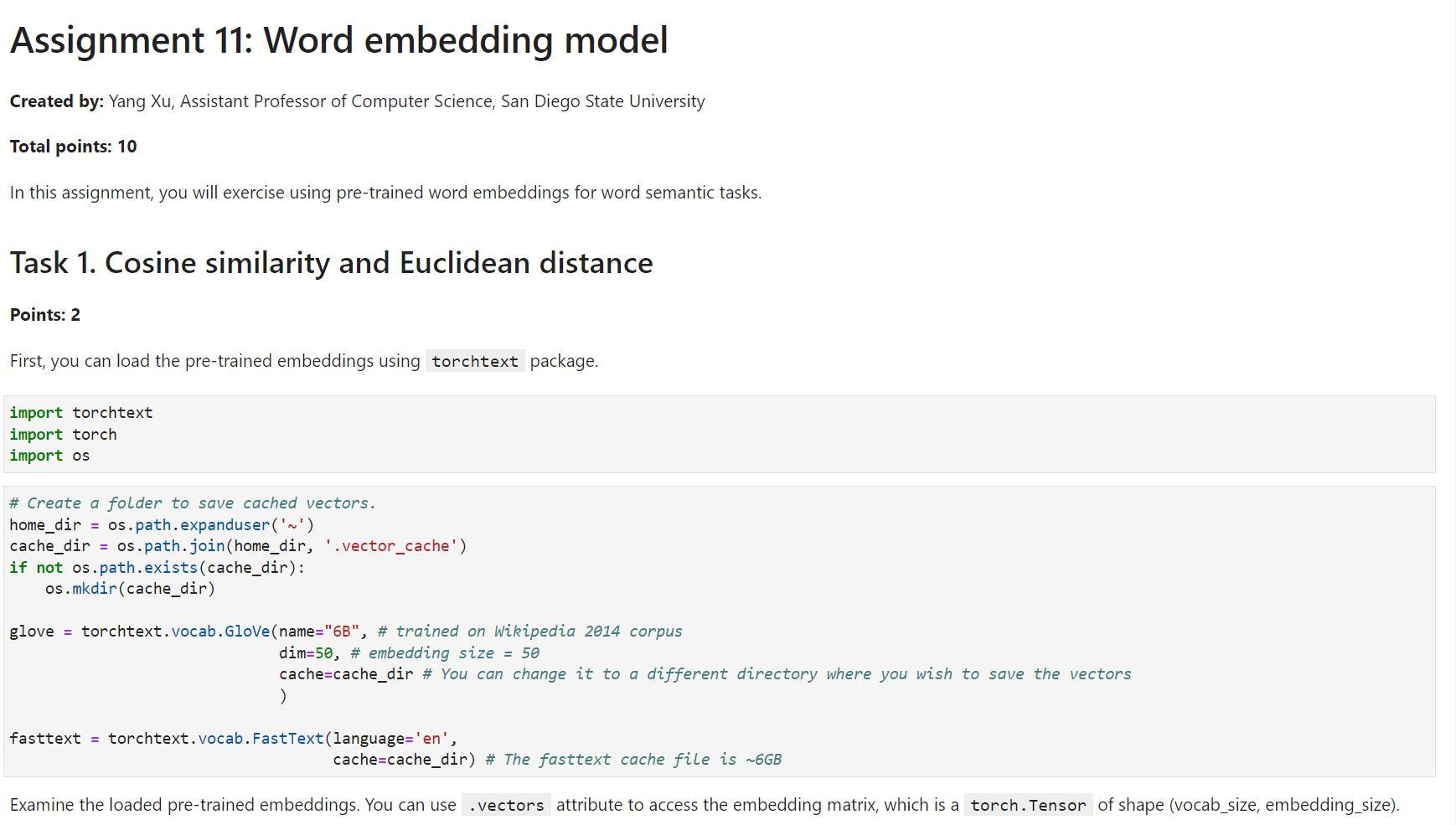

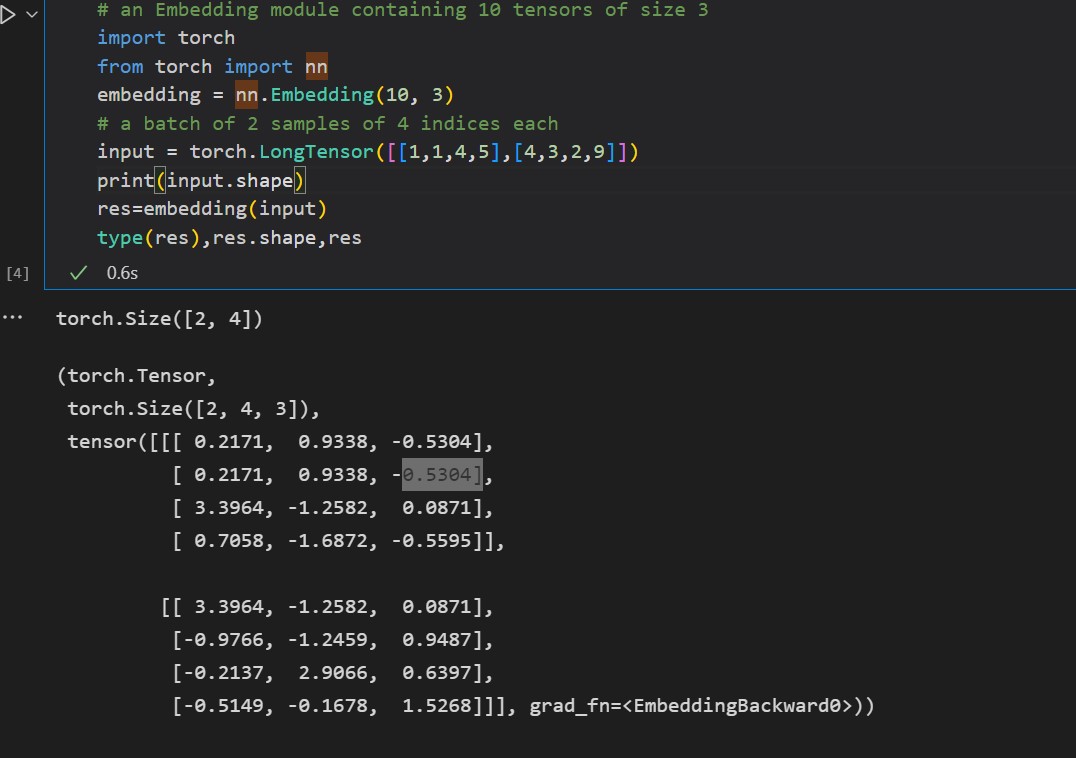

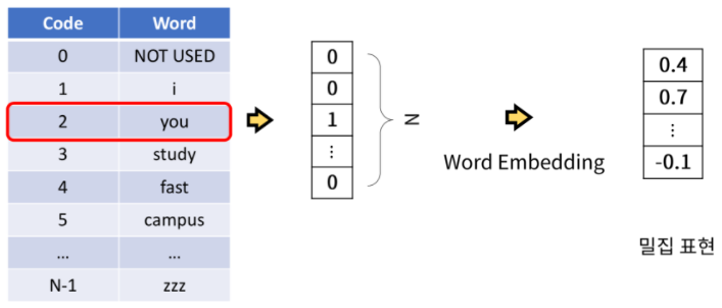

How to use nn.Embedding (pytorch). Numericalization and vectorization of words. - Basics of control engineering, this and that

The Secret to Improved NLP: An In-Depth Look at the nn.Embedding Layer in PyTorch | by Will Badr | Towards Data Science

Training Larger and Faster Recommender Systems with PyTorch Sparse Embeddings | by Bo Liu | NVIDIA Merlin | Medium
