pytorch-deep-learning/08_pytorch_paper_replicating.ipynb at main · mrdbourke/pytorch-deep-learning · GitHub

Can't convert nn.multiheadAttetion(q,k,v) to Onnx when key isn't equal to value · Issue #78060 · pytorch/pytorch · GitHub

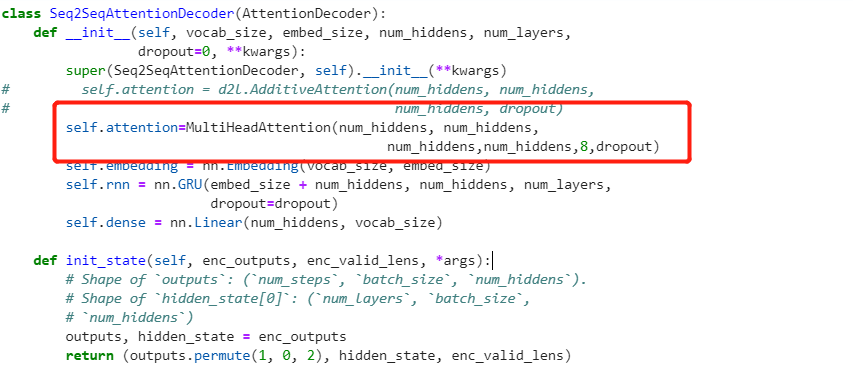

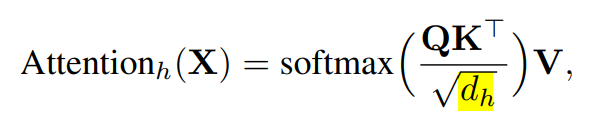

Why denominator in multi-head attention in PyTorch's implementation different from most proposed structure? - PyTorch Forums

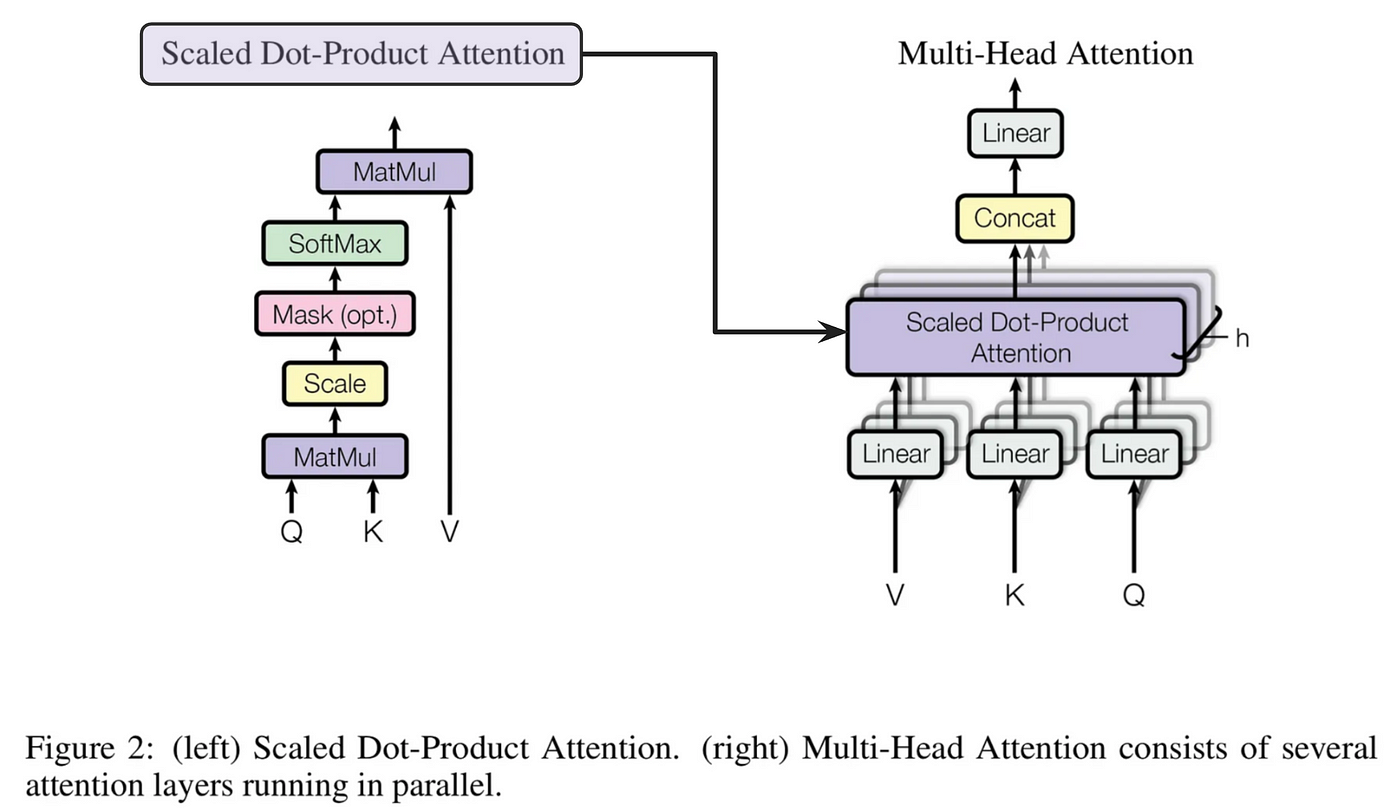

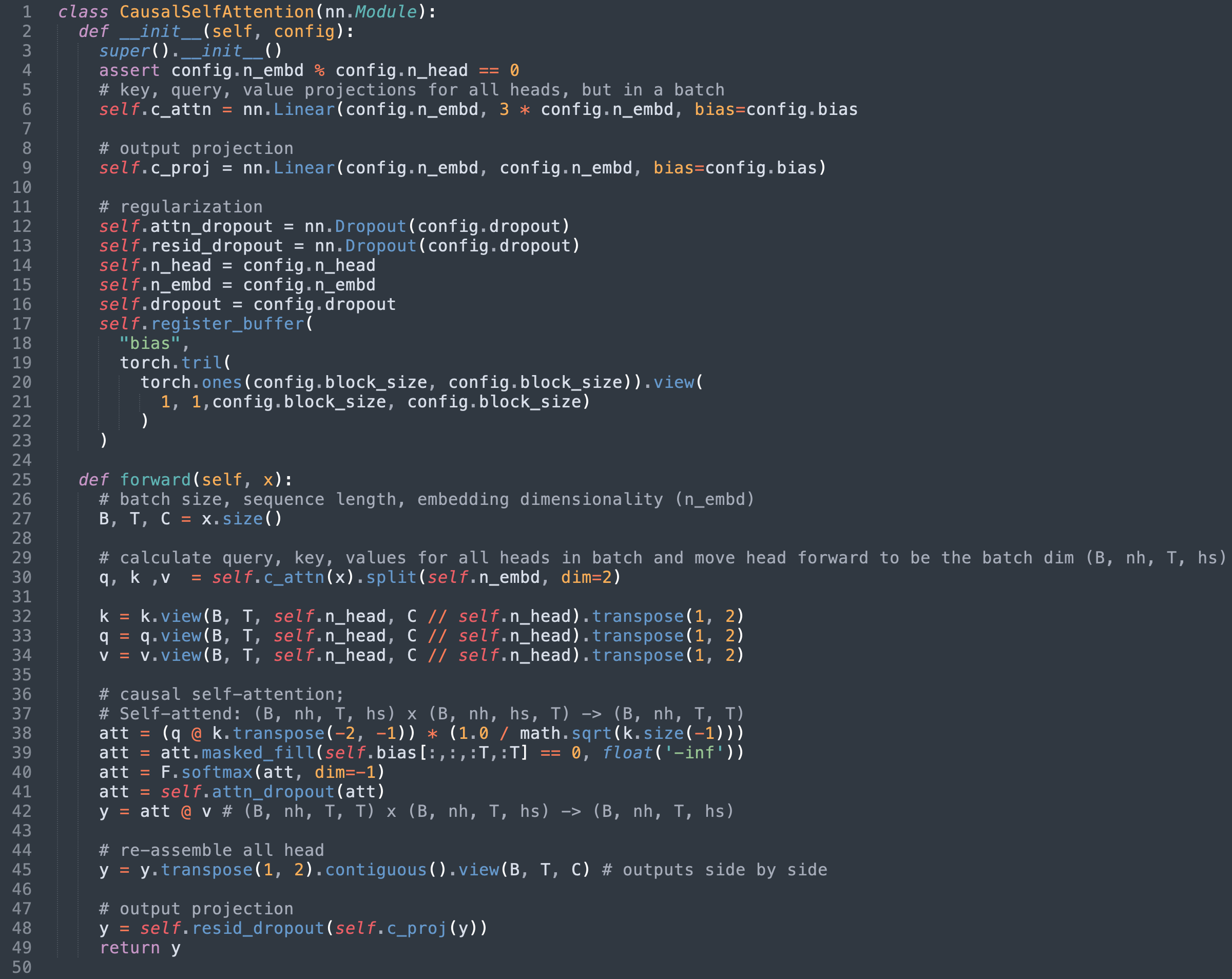

transformer - When exactly does the split into different heads in Multi-Head-Attention occur? - Artificial Intelligence Stack Exchange

Why not use nn.MultiheadAttention in vit? · huggingface pytorch-image-models · Discussion #283 · GitHub