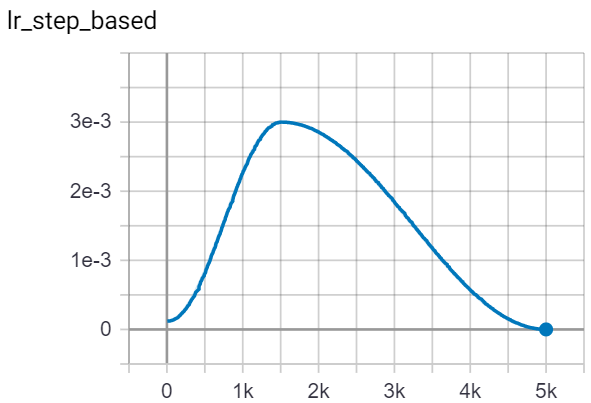

04C_08. Guide to Pytorch Learning Rate Scheduling - Deep Learning Bible - 2. Classification - English

Loss jumps abruptly when I decay the learning rate with Adam optimizer in PyTorch - Artificial Intelligence Stack Exchange

base_lrs in torch.optim.lr_scheduler.CyclicLR gets overriden by parent class if parameter groups have 'initial_lr' set · Issue #21965 · pytorch/pytorch · GitHub

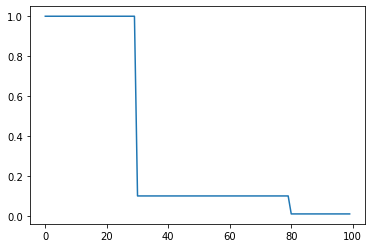

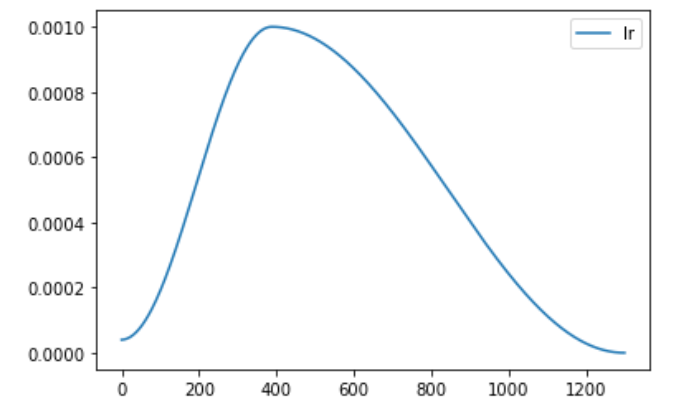

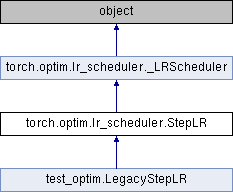

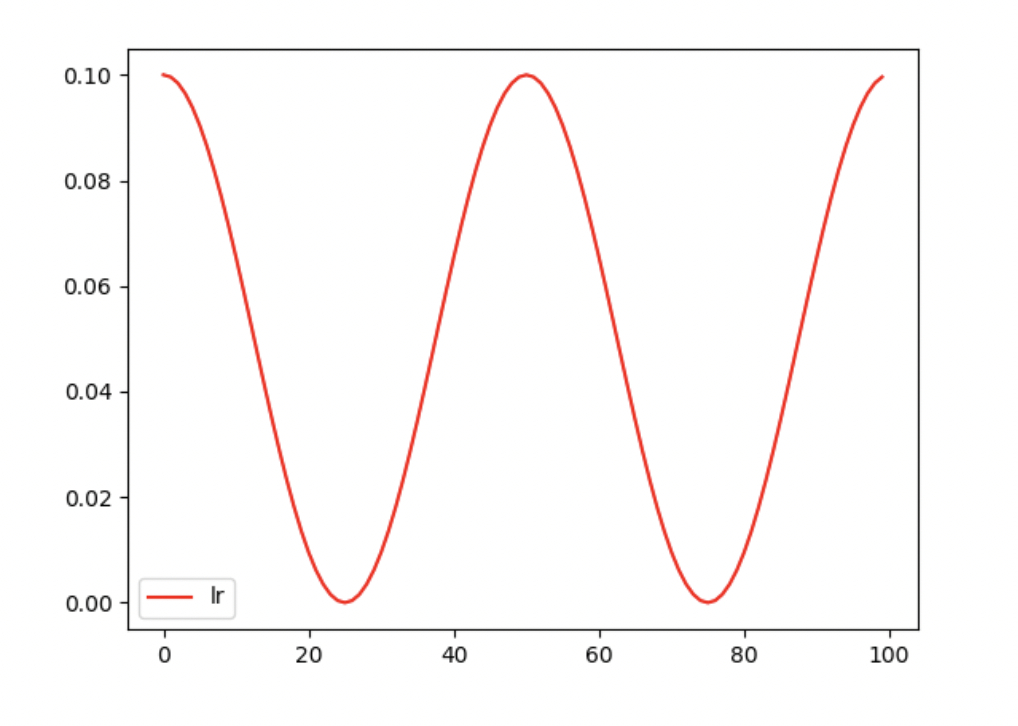

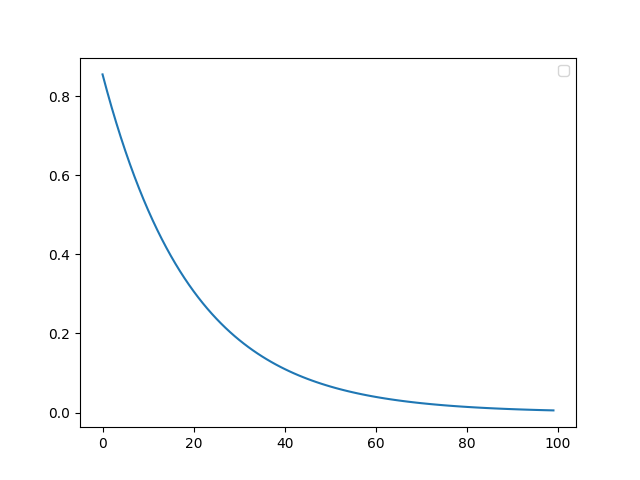

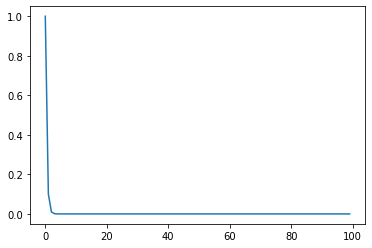

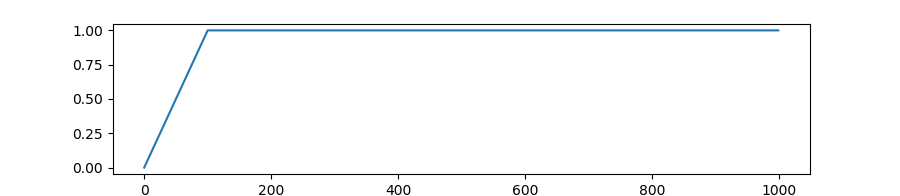

A Visual Guide to Learning Rate Schedulers in PyTorch | by Leonie Monigatti | Dec, 2022 | Towards Data Science

Error fix: UserWarning: Detected call of `lr_scheduler.step()` before `optimizer.step()`. - kkkshiki - 博客园